vLLM is an open-source library, originally developed at UC Berkeley, designed to provide fast and easy inference and serving for large language models (LLMs). vLLM uses the PagedAttention algorithm to manage attention keys and values efficiently, achieving higher throughput than other libraries such as HuggingFace Transformers and HuggingFace Text Generation Inference (TGI).

Key Features of vLLM

- PagedAttention: A new attention algorithm, inspired by virtual memory and paging in operating systems, that manages attention key and value memory efficiently by allowing continuous keys and values to be stored in non-contiguous memory space.

- High Performance: In the vLLM team's benchmarks, vLLM achieved up to 24x higher throughput than HuggingFace Transformers and up to 3.5x higher throughput than TGI.

- Flexibility: Seamless integration with popular Hugging Face models, and support for decoding algorithms such as parallel sampling and beam search.

- Multi-platform Support: Supports NVIDIA GPUs, AMD CPUs and GPUs, Intel CPUs and GPUs, and PowerPC CPUs.

- Memory Sharing: Efficient memory sharing in complex sampling algorithms like parallel sampling and beam search, reducing memory costs by up to 55%.
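To make the PagedAttention and memory-sharing ideas more concrete, here is a toy Python sketch of the bookkeeping involved. Each sequence keeps a block table that maps its logical KV-cache blocks to physical block IDs, and a forked sequence (as in parallel sampling) shares its parent's blocks through reference counts, getting a private copy of a block only when it writes to a shared, partially filled one. The class names and the BLOCK_SIZE value are illustrative assumptions; the sketch tracks block IDs only and is not vLLM's actual implementation or API.

```python
BLOCK_SIZE = 4  # tokens per KV-cache block (illustrative value, an assumption)


class BlockAllocator:
    """Hands out fixed-size physical KV-cache blocks and tracks sharing."""

    def __init__(self, num_blocks):
        self.free_blocks = list(range(num_blocks))
        self.ref_count = [0] * num_blocks

    def allocate(self):
        if not self.free_blocks:
            raise MemoryError("out of KV-cache blocks")
        block = self.free_blocks.pop()
        self.ref_count[block] = 1
        return block

    def share(self, block):
        # Another sequence now points at the same physical block.
        self.ref_count[block] += 1
        return block

    def release(self, block):
        self.ref_count[block] -= 1
        if self.ref_count[block] == 0:
            self.free_blocks.append(block)


class Sequence:
    """One generation stream; its block table maps logical -> physical blocks."""

    def __init__(self, allocator):
        self.allocator = allocator
        self.block_table = []  # index = logical block, value = physical block id
        self.num_tokens = 0

    def append_token(self):
        if self.num_tokens % BLOCK_SIZE == 0:
            # Last block is full (or none exists yet): take any free block.
            self.block_table.append(self.allocator.allocate())
        else:
            last = self.block_table[-1]
            if self.allocator.ref_count[last] > 1:
                # Copy-on-write: a real system would also copy the KV data here.
                new_block = self.allocator.allocate()
                self.allocator.release(last)
                self.block_table[-1] = new_block
        self.num_tokens += 1

    def fork(self):
        # Parallel sampling: the child shares every existing block with the parent.
        child = Sequence(self.allocator)
        child.block_table = [self.allocator.share(b) for b in self.block_table]
        child.num_tokens = self.num_tokens
        return child

    def slot(self, token_index):
        # Where a token's keys and values live: (physical block, offset in block).
        return self.block_table[token_index // BLOCK_SIZE], token_index % BLOCK_SIZE

    def free(self):
        for block in self.block_table:
            self.allocator.release(block)
        self.block_table = []
        self.num_tokens = 0


allocator = BlockAllocator(num_blocks=8)
parent = Sequence(allocator)
for _ in range(6):
    parent.append_token()  # parent.block_table becomes [7, 6]
child = parent.fork()      # both sequences now point at blocks 7 and 6
child.append_token()       # child writes to shared block 6, so it gets block 5
```

After the last line, the parent's table is still [7, 6] while the child's is [7, 5]: the full block 7 stays shared, and only the partially filled block was duplicated. Physical block numbers need not be contiguous or in order, which is the point of the indirection.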

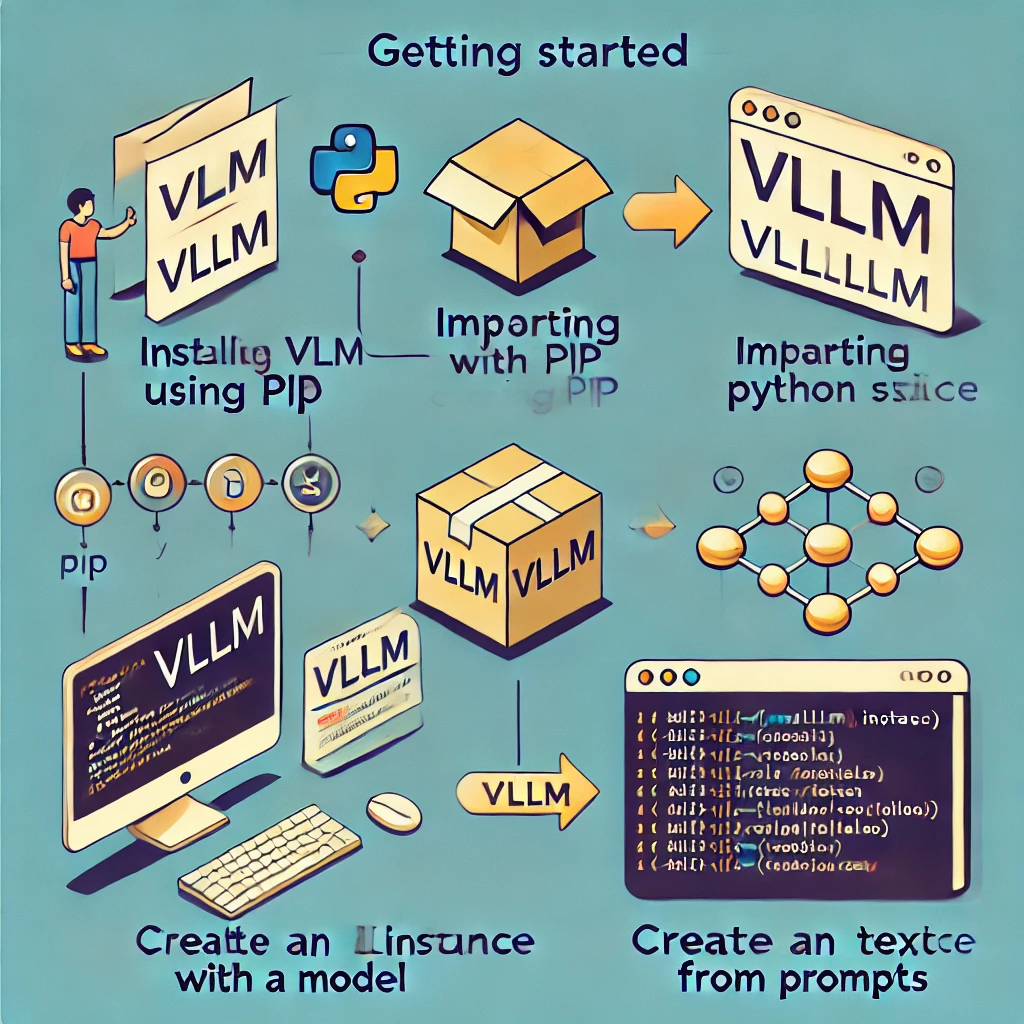

Detailed Guide to Installing and Using vLLM

1. Prerequisites

Before installing vLLM, ensure you have the following prerequisites:

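- Python 3.8 or higher: vLLM does not support older Python versions, and newer vLLM releases may require a more recent minimum, so check the vLLM installation guide for the supported range. You can download the latest version of Python from the official Python website.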

- pip: Ensure you have pip installed. pip is the package installer for Python. You can check whether pip is installed by running:

pip --version

- CUDA: If you plan to use vLLM with NVIDIA GPUs, ensure that a compatible NVIDIA driver and CUDA version are installed and properly configured.

- Operating system: vLLM officially supports Linux; on Windows, it is typically run under WSL.
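Before installing, a short Python script can confirm the basic prerequisites listed above. This is a minimal sketch: the minimum version (3, 8) mirrors the requirement stated here and is an assumption to update from the vLLM installation guide, the helper names check_prerequisites and cuda_available are hypothetical, and the CUDA check only runs if PyTorch is already installed (torch.cuda.is_available() is PyTorch's API, not vLLM's).

```python
import importlib.util
import sys

MIN_PYTHON = (3, 8)  # assumption: confirm the current minimum in the vLLM docs


def check_prerequisites(min_python=MIN_PYTHON):
    """Return a dict of simple environment checks (hypothetical helper)."""
    return {
        "python_version": ".".join(map(str, sys.version_info[:3])),
        "python_ok": sys.version_info[:2] >= tuple(min_python),
        "pip_installed": importlib.util.find_spec("pip") is not None,
        "linux": sys.platform.startswith("linux"),
    }


def cuda_available():
    """True/False if PyTorch is installed, None if it is not installed yet."""
    if importlib.util.find_spec("torch") is None:
        return None  # pip install vllm pulls in PyTorch as a dependency
    import torch

    return torch.cuda.is_available()


if __name__ == "__main__":
    for name, value in check_prerequisites().items():
        print(f"{name}: {value}")
    print(f"cuda_available: {cuda_available()}")
```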

2. Installing vLLM

You can install vLLM using pip. Here’s a step-by-step guide:

- Create a Virtual Environment (optional but recommended): Creating a virtual environment helps manage dependencies and avoid conflicts with other Python packages. You can create a virtual environment using venv:

python -m venv vllm_env

Activate the virtual environment:

- On Windows:

vllm_env\Scripts\activate

- On macOS and Linux:

source vllm_env/bin/activate

- Install vLLM:

With the virtual environment activated (if you chose to create one), install vLLM using pip:

pip install vllm

3. Getting Started with vLLM
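To confirm the installation before running a model, you can look up the installed package version. The snippet below is a minimal sketch that uses only the standard library's importlib.metadata, which reads package metadata without importing vllm itself (importing vllm also loads PyTorch, which is slow). The helper name installed_version is hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package="vllm"):
    """Return the installed version string, or None if the package is missing."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    found = installed_version()
    if found is None:
        print("vLLM is not installed in this environment; run: pip install vllm")
    else:
        print(f"vLLM {found} is installed")
```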

Offline Inference:

To use vLLM for offline inference, you can import the library and use the LLM class in your Python scripts:

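from vllm import LLM, SamplingParams

prompts = ["Hello, my name is", "The capital of France is"]  # Sample prompts.
sampling_params = SamplingParams(temperature=0.8, top_p=0.95)  # Sampling settings.
llm = LLM(model="lmsys/vicuna-7b-v1.3")  # Create an LLM.
outputs = llm.generate(prompts, sampling_params)  # Generate texts from the prompts.
for output in outputs:  # Each RequestOutput holds the prompt and its completions.
    print(output.prompt, "->", output.outputs[0].text)

Online Serving: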

To use vLLM for online serving, you can start an OpenAI API-compatible server:

python -m vllm.entrypoints.openai.api_server --model lmsys/vicuna-7b-v1.3

By default, the server listens on port 8000. Then, you can send requests to the server using curl:

curl http://localhost:8000/v1/completions \
    -H "Content-Type: application/json" \
    -d '{
        "model": "lmsys/vicuna-7b-v1.3",
        "prompt": "San Francisco is a",
        "max_tokens": 7,
        "temperature": 0
    }'

4. Recent Updates and Progress

The Future of vLLM is Open:

vLLM has started the incubation process into the LF AI & Data Foundation, ensuring open and transparent ownership and governance. The license and trademark will remain irrevocably open, guaranteeing continuous maintenance and improvement.

Performance is a Top Priority:

The vLLM contributors are dedicated to making vLLM the fastest and easiest-to-use LLM inference and serving engine. Recent advancements include:

- Publication of Performance Benchmarks: A performance tracker at perf.vllm.ai to monitor enhancements and regressions.

- Development of Highly Optimized Kernels: Integration of FlashAttention2 with PagedAttention and FlashInfer, and plans for FlashAttention3.

- Quantized Inference: Development of state-of-the-art kernels for INT8 and FP8 activation quantization, and weight-only quantization for GPTQ and AWQ.
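To illustrate what INT8 quantization means in its simplest form, the sketch below applies symmetric per-tensor round-to-nearest quantization to a few weights: each value is stored as an 8-bit integer q with one shared floating-point scale, and reconstructed as q × scale. This is a generic textbook scheme chosen for illustration, not vLLM's kernels; GPTQ and AWQ choose quantized values more carefully, and FP8 uses a floating-point rather than an integer format. The function names are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor INT8 quantization: w is approximated by q * scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer and clamp to the symmetric INT8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q, scale):
    """Map INT8 values back to approximate floating-point weights."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_int8(weights)  # q == [50, -127, 0, 100], scale is about 0.01
restored = dequantize_int8(q, scale)
# With round-to-nearest, each weight's error is at most scale / 2.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

Storing q as 8-bit integers takes a quarter of the memory of 32-bit floats (half that of FP16), at the cost of the bounded rounding error shown by max_error.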

Addressing Overheads:

Several efforts are underway to reduce critical overheads:

- Asynchronous Scheduler: Working on making the scheduler asynchronous for models running on fast GPUs (H100s).

- Optimizing API Frontend: Isolating the OpenAI-compatible API frontend from the critical path of the scheduler and model inference.

- Improving Input and Output Processing: Vectorizing operations and moving them off the critical path for better scalability with data size.
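Why does scheduler overhead matter more on fast GPUs? A back-of-the-envelope model helps. Suppose each decoding step needs schedule_ms of CPU scheduling and model_ms of GPU execution. Run sequentially, every step pays both costs; if scheduling the next step could run while the GPU executes the current one (an idealized assumption, since a real scheduler depends on the previous step's outputs), the two form a two-stage pipeline limited by the slower stage. The function names and timings below are hypothetical and do not describe vLLM's actual scheduler.

```python
def sequential_time_ms(steps, schedule_ms, model_ms):
    """CPU schedules a step, then the GPU runs it; nothing overlaps."""
    return steps * (schedule_ms + model_ms)


def overlapped_time_ms(steps, schedule_ms, model_ms):
    """Two-stage pipeline: the CPU schedules step i + 1 while the GPU runs step i."""
    if steps == 0:
        return 0
    # The first schedule and the last model run cannot be hidden; every other
    # step costs only the slower of the two stages.
    return schedule_ms + model_ms + (steps - 1) * max(schedule_ms, model_ms)


for model_ms in (8, 4):  # hypothetical: a slower GPU step, then a faster one
    seq = sequential_time_ms(100, schedule_ms=2, model_ms=model_ms)
    ovl = overlapped_time_ms(100, schedule_ms=2, model_ms=model_ms)
    print(f"model_ms={model_ms}: sequential={seq} ms, overlapped={ovl} ms")
```

With 2 ms of scheduling per step, sequential execution spends 20% of its time scheduling when a model step takes 8 ms (1000 ms versus 802 ms overlapped), but about 33% when a model step takes 4 ms (600 ms versus 402 ms). The faster the GPU, the larger the share of time lost to CPU-side work on the critical path.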

5. Applications of vLLM

- Chatbots and Virtual Assistants: Serve the models behind chatbot systems with low latency and high throughput.

- Automated Translation: Serve models that translate text from one language to another.

- Content Creation: Power models that write articles, create digital content, and draft scripts for films or TV shows.

- Sentiment Analysis: Run models that analyze sentiment in text, supporting customer service and other applications.

6. Supporting Organizations

vLLM is a community project supported by organizations such as:

- a16z

- AMD

- AWS

- Dropbox

- NVIDIA

- Roblox

vLLM welcomes contributions and collaborations from the community. For more information on how to contribute, refer to the CONTRIBUTING.md file in the vLLM GitHub repository. For support or to report issues, join the vLLM community on Discord or use GitHub Issues.

Conclusion

vLLM is a powerful and flexible library for deploying and serving large language models. With its easy integration, high performance, and strong community support, vLLM is set to become a key tool for AI applications. Whether you are working on chatbots, content creation, or automated translation, vLLM provides the infrastructure needed to deliver efficient and scalable solutions.

FAQs about vLLM

What is vLLM?

vLLM is an open-source library, originally developed at UC Berkeley, for fast and easy inference and serving of large language models (LLMs).

What are the benefits of PagedAttention?

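PagedAttention stores attention keys and values in fixed-size blocks that do not need to be contiguous in memory. This reduces wasted memory, lets sequences share blocks (for example, in parallel sampling), and allows more requests to be batched together, which increases throughput.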

What hardware does vLLM support?

vLLM supports NVIDIA GPUs, AMD CPUs and GPUs, Intel CPUs and GPUs, and PowerPC CPUs.

Can I use vLLM with Hugging Face models?

Yes. vLLM can load many popular model architectures directly from the Hugging Face Hub.

What is the performance advantage of vLLM?

In the vLLM team's benchmarks, vLLM delivered up to 24x higher throughput than HuggingFace Transformers and up to 3.5x higher throughput than TGI.

Who supports the development of vLLM?

vLLM is supported by organizations like UC Berkeley, Anyscale, AWS, Databricks, IBM, and more.

How can I contribute to vLLM?

You can contribute by submitting issues or pull requests on the vLLM GitHub repository and joining the community on Discord.