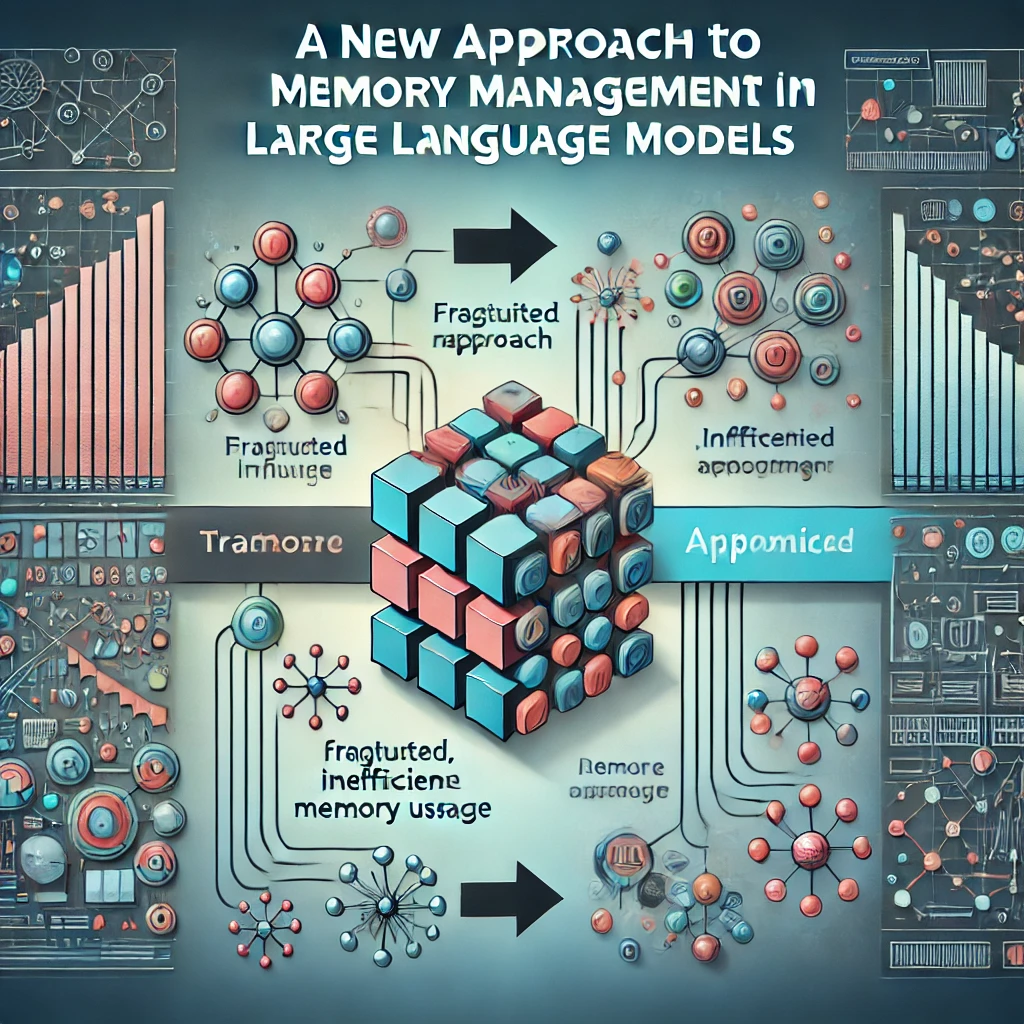

Efficient Memory Sharing in Complex Sampling Algorithms

In large-scale AI deployments, memory efficiency is crucial, particularly when running complex sampling algorithms such as parallel sampling and beam search. These algorithms are essential for generating high-quality outputs from large language models (LLMs) and other AI systems. However, as the size and complexity of AI models grow, the […]